Deep learning and microfluidics: a review

Author

Francesca Romana Brugnoli, PhD

Publication Date

February 24, 2021

Keywords

biological analysis

deep learning

Organ-on-a-chip

neural networks

AI-based microfluidics

Introduction to deep learning techniques for microfluidics

The unique features of microfluidics-based devices brought tremendous advancements in many different applications, including experimental biology and biomedical research.

Nevertheless, the full potential of this technology has not yet been reached. The large amount of data generated by high-throughput systems needs to be analyzed as efficiently as it is generated.

Deep learning architectures for biological analysis

Other deep learning neural networks can handle sequential data, such as the data generated by microfluidic devices. These networks are called recurrent neural networks (RNNs). They can be further divided based on their output: sequence-to-unstructured architectures produce a single output after receiving a sequential input.

A sequence is a vector in which the order of the elements matters (e.g., a time series or an image), whereas for unstructured data the order of the components is irrelevant (e.g., a vector of cell traits such as width and length). Sequence-to-unstructured architectures are trained with a technique known as back-propagation through time [11]. Such a network can be used, for example, to characterize a microfluidic soft sensor and address limitations such as the nonlinearity and hysteresis of its response [14].
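As a rough illustration of the sequence-to-unstructured idea, the sketch below runs a one-unit recurrent network over a sequence and returns a single value. The function name `rnn_last_output` and all weights are hypothetical constants chosen for illustration; a real network would learn its weights by back-propagation through time, which is omitted here.

```python
import math

def rnn_last_output(sequence, w_in=0.8, w_rec=0.5, bias=0.0, w_out=1.0):
    """Run a one-unit recurrent network and return one value for the whole sequence.

    All weights are illustrative constants, not trained values.
    """
    hidden = 0.0  # hidden state carried from one element to the next
    for x in sequence:
        # The new state depends on the current input and on the previous state,
        # which is why the order of the elements matters.
        hidden = math.tanh(w_in * x + w_rec * hidden + bias)
    # Sequence-to-unstructured: only the final state is mapped to an output.
    return w_out * hidden

rising = [0.25, 0.5, 1.0]          # hypothetical sensor readings
falling = list(reversed(rising))   # same values, opposite order

print(rnn_last_output(rising))
print(rnn_last_output(falling))
# An order-independent summary (the mean) cannot tell the two apart.
print(sum(rising) / len(rising) == sum(falling) / len(falling))
```

The two sequences contain the same values, so any unstructured summary of them is identical, yet the recurrent network returns different outputs because it processes the elements in order.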

Han et al. applied deep learning in the calibration stage to estimate the magnitude and location of a contact pressure simultaneously [14]. They fabricated two sensors to acquire data: one had a single straight microchannel with three different cross-sectional areas in three segments, and the other had a single-sized microchannel with three patterns in different locations.

The experiments were carried out by compressing the top surface at different speeds and pressures at several locations on the sensor. The RNN algorithm, composed of modular networks, can model the nonlinear, hysteretic pressure response and find the location of the pressure.

On the other hand, sequence-to-sequence neural networks produce a sequence as output. DNA base calling is an example: the MinION nanopore sequencing platform is a high-throughput DNA sequencer that allows the data to be analyzed in real time as they are produced [15].

Applications that benefit from this type of neural network are those where accuracy can be increased by considering previous measurements, like the growth of a cell via volume [1] or mass [16]. For example, every pulse amplitude corresponds to the passage of a cell; this means that each element of the input sequence is annotated.
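The sequence-to-sequence case can be sketched in the same spirit: the network emits one output per input element, and each output reflects the measurements that came before it. The function `rnn_annotate`, its weights, and the pulse amplitudes are all hypothetical and untrained.

```python
import math

def rnn_annotate(sequence, w_in=1.0, w_rec=0.5, w_out=1.0):
    """Return one output per input element (sequence-to-sequence).

    Weights are illustrative constants, not trained values.
    """
    hidden = 0.0
    outputs = []
    for x in sequence:
        # The hidden state mixes the current input with the running history.
        hidden = math.tanh(w_in * x + w_rec * hidden)
        outputs.append(w_out * hidden)  # annotate this element
    return outputs

pulses = [0.2, 0.2, 0.9, 0.2]  # hypothetical pulse amplitudes, one per cell
annotations = rnn_annotate(pulses)

for amplitude, annotation in zip(pulses, annotations):
    print(amplitude, round(annotation, 3))
```

The amplitude 0.2 appears three times but receives three different annotations, because each output also depends on the preceding elements of the sequence.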

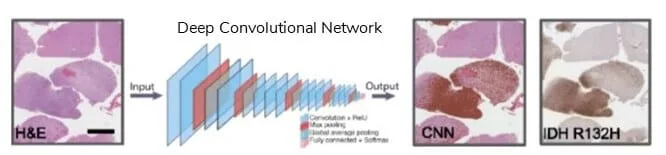

Deep learning networks can also analyze spatially distributed data such as images. This further improves cell classification, which can be done directly without prior manual trait extraction. Neural networks used to process images are called convolutional neural networks (CNNs).

The element used to analyze the image is the convolutional block, which can be described as a filter that slides along the image, outputs the weighted sum of pixel values for that filter within the region of the image processed, and applies a nonlinear transformation.
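A minimal sketch of such a convolutional block is shown below, written in plain Python for readability. The function name `conv_block`, the toy image, and the hand-picked edge filter are assumptions for illustration; in a real CNN the filter weights are learned, and ReLU is only one possible nonlinearity. As in most deep learning libraries, the filter is applied without flipping (strictly speaking, a cross-correlation).

```python
def conv_block(image, kernel, bias=0.0):
    """Slide a filter over a 2D image, take weighted sums, then apply ReLU."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    feature_map = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            # Weighted sum of the pixels currently under the filter.
            weighted_sum = bias
            for i in range(k_rows):
                for j in range(k_cols):
                    weighted_sum += kernel[i][j] * image[r + i][c + j]
            # Nonlinear transformation (ReLU keeps positive responses only).
            row.append(max(0.0, weighted_sum))
        feature_map.append(row)
    return feature_map

# Toy 4x4 image: dark left half, bright right half.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hand-picked filter that responds to a dark-to-bright vertical edge.
edge_filter = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv_block(image, edge_filter)
print(feature_map)
```

The resulting feature map is nonzero only in the column where the filter straddles the edge, which is the sense in which a filter "detects" a feature at a location.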

Convolutional layers are typically followed by pooling layers, which extract the most dominant values in the feature maps [19]. One example is the use of a deep-learning CNN to classify a binary population of lymphocytes and red blood cells at high throughput [8].
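Extracting the most dominant values of a feature map is commonly done with max pooling. The sketch below is one minimal way to write it; the function name `max_pool` and the example values are illustrative, and non-overlapping 2x2 windows are an assumption rather than a requirement.

```python
def max_pool(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    pooled = []
    for r in range(0, len(feature_map) - size + 1, size):
        row = []
        for c in range(0, len(feature_map[0]) - size + 1, size):
            window = [
                feature_map[r + i][c + j]
                for i in range(size)
                for j in range(size)
            ]
            row.append(max(window))
        pooled.append(row)
    return pooled

# Hypothetical 4x4 feature map produced by a convolutional layer.
feature_map = [
    [1, 3, 2, 0],
    [4, 2, 1, 1],
    [0, 1, 5, 6],
    [2, 2, 7, 8],
]

pooled = max_pool(feature_map)
print(pooled)
```

Pooling halves each spatial dimension here, so later layers see a smaller map that retains the strongest responses.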

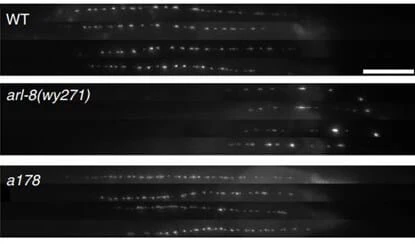

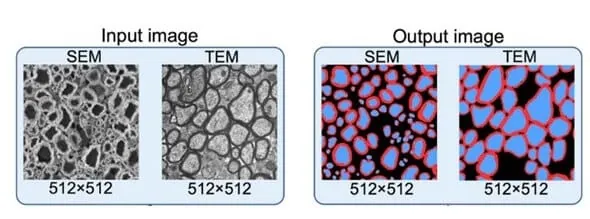

Image-to-image neural networks are used in many applications. Image segmentation is one growing area of interest, because fully segmented images can be generated starting from cell contours.

For example, the nerve cell segmentation application aims to map each pixel in the input image to one of many classes in the corpus [21]. Nerve cell images are segmented into regions marking axon (blue), myelin (red), and background (black) [20].
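The final step of such a pixel-wise classification can be sketched as follows: given a score per class for every pixel, each pixel is assigned the class with the highest score. The per-pixel scores below are made up for illustration (in practice they would come from the segmentation network), and the function name `label_pixels` is hypothetical.

```python
CLASSES = ("background", "axon", "myelin")

def label_pixels(scores):
    """Map each pixel's class scores to the name of the best-scoring class."""
    labels = []
    for row in scores:
        labeled_row = []
        for pixel_scores in row:
            # Index of the largest score for this pixel.
            best = max(range(len(pixel_scores)), key=pixel_scores.__getitem__)
            labeled_row.append(CLASSES[best])
        labels.append(labeled_row)
    return labels

# Hypothetical network output for a 2x2 image: one score per class per pixel,
# ordered as in CLASSES.
scores = [
    [[0.1, 0.7, 0.2], [0.2, 0.3, 0.5]],
    [[0.8, 0.1, 0.1], [0.3, 0.6, 0.1]],
]

labels = label_pixels(scores)
print(labels)
```

The output has the same height and width as the input, which is what distinguishes image-to-image networks from classifiers that return one label per image.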

Video processing and deep learning

These approaches can be combined to analyze videos. For example, Buggenthin and colleagues combined a CNN and an RNN to identify hematopoietic lineage choice, and could predict the differentiation of cells before they expressed conventional molecular markers [24].

The first step concerns extracting features from bright-field images by applying a CNN; the RNN then processes these data to track information in time by considering previous frames.
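The two-stage structure described above can be sketched as a per-frame feature extractor followed by a recurrent update over frames. This is only a structural illustration under strong assumptions: `frame_features` is a hand-written stand-in for a trained CNN, the weights are arbitrary constants, and none of it reproduces the published method.

```python
import math

def frame_features(frame):
    """Stand-in for a CNN: reduce one frame to a short feature vector."""
    pixels = [p for row in frame for p in row]
    mean = sum(pixels) / len(pixels)
    spread = max(pixels) - min(pixels)
    return [mean, spread]

def track_over_time(frames, w_in=(1.0, 0.5), w_rec=0.5):
    """Recurrent stage: update a hidden state frame by frame.

    Returns the hidden state after each frame. Weights are illustrative.
    """
    hidden = 0.0
    history = []
    for frame in frames:
        features = frame_features(frame)
        drive = sum(w * f for w, f in zip(w_in, features))
        # The state after this frame also depends on all previous frames.
        hidden = math.tanh(drive + w_rec * hidden)
        history.append(hidden)
    return history

# Hypothetical video: the same 2x2 bright-field frame shown twice.
frame = [[0.1, 0.2], [0.3, 0.4]]
history = track_over_time([frame, frame])
print(history)
```

Even though both frames are identical, the second state differs from the first, because the recurrent stage carries information forward from earlier frames.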

Organ-on-a-chip (OOC) and AI-autonomous living systems with deep-learning networks

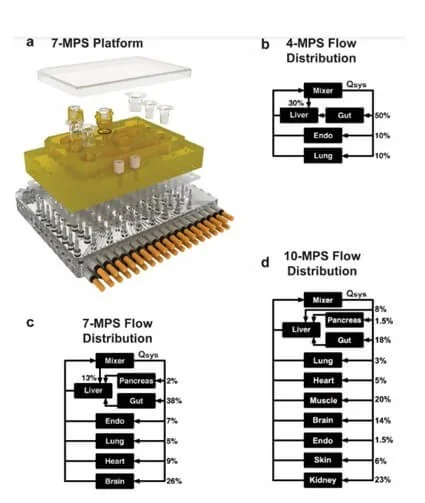

Deep learning algorithms could significantly impact more complex systems such as organ-on-a-chip. OOCs are 3D microfluidic devices that reproduce tissues or entire organs so that their activity and environment can be studied.

As OOC devices continue to evolve, large amounts of data will be fed to deep learning networks from images and videos of tissue and organ development in their in vitro environments; moreover, large portions of tissues and organs could be analyzed to detect spatial heterogeneity, as in modern histopathology analysis [26].

CNNs have been used to organize and classify histomorphological information [27] and could also be used to analyze fluorescence microscopic images of tissue cultures on chips.

Deep learning for experimental design and control

Deep learning networks could be implemented in the emerging field of multiorgan systems to monitor individual organs, assess their communication, and provide real-time control of multiple OOC systems; very interestingly, this could also lead to a multiorgan system that can regulate itself [28].

Cloud-based deep learning

Conclusions

This review was made possible by the support of NeuroTrans, H2020-MSCA-ITN-2019 Action “Innovative Training Networks”, grant agreement number 860954.

Contact: Partnership[at]microfluidic.fr

References

1. Riordon, J. et al. (2014) Quantifying the volume of single cells continuously using a microfluidic pressure-driven trap with media exchange. Biomicrofluidics 8, 011101

2. Ko, J. et al. (2018) Machine learning to detect signatures of disease in liquid biopsies – a user’s guide. Lab Chip 18, 395–405

3. Guo, B. et al. (2017) High-throughput, label-free, single-cell, microalgal lipid screening by machine-learning-equipped optofluidic time-stretch quantitative phase microscopy. Cytometry A 91, 494–502

4. Ko, J. et al. (2017) Combining machine learning and nanofluidic technology to diagnose pancreatic cancer using exosomes. ACS Nano 11, 11182–11193

5. Singh, D.K. et al. (2017) Label-free, high-throughput holographic screening and enumeration of tumor cells in blood. Lab Chip 17, 2920–2932

6. Mahdi, Y. and Daoud, K. (2017) Microdroplet size prediction in microfluidic systems via artificial neural network modeling for water-in-oil emulsion formulation. J. Dispers. Sci. Technol. 38, 1501–1508

7. Chen, C.L. et al. (2016) Deep learning in label-free cell classification. Sci. Rep. 6, 21471

8. Heo, Y.J. et al. (2017) Real-time image processing for microscopy-based label-free imaging flow cytometry in a microfluidic chip. Sci. Rep. 7, 21471

9. Van Valen, D.A. et al. (2016) Deep learning automates the quantitative analysis of individual cells in live-cell imaging experiments. PLoS Comput. Biol. 12, e1005177

10. Gopakumar, G. et al. (2017) Cytopathological image analysis using deep-learning networks in microfluidic microscopy. J. Opt. Soc. Am. A Opt. Image Sci. Vis. 34, 111

11. Das, D.K. et al. (2013) Machine learning approach for automated screening of malaria parasite using light microscopic images. Micron 45, 97–106

12. San-Miguel, A. et al. (2016) Deep phenotyping unveils hidden traits and genetic relations in subtle mutants. Nat. Commun. 7, 12990

13. Vasilevich, A.S. et al. (2017) How not to drown in data: a guide for biomaterial engineers. Trends Biotechnol. 35, 743–755

14. Han, S. et al. (2018) Use of deep learning for characterization of microfluidic soft sensors. IEEE Robot. Autom. Lett. 3, 873–880

15. Boža, V. et al. (2017) DeepNano: deep recurrent neural networks for base calling in MinION nanopore reads. PLoS One 12, e0178751

16. Godin, M. et al. (2010) Using buoyant mass to measure the growth of single cells. Nat. Methods 7, 387–390

17. Jozefowicz, R. et al. (2016) Exploring the limits of language modeling. arXiv

18. Qu, Y.-H. et al. (2017) On the prediction of DNA-binding proteins only from primary sequences: a deep learning approach. PLoS One 12, e0188129

19. LeCun, Y. and Bengio, Y. (1995) Convolutional networks for images, speech, and time series. Handb. Brain Theory Neural Netw. 3361, 1995

20. Zaimi, A. et al. (2018) AxonDeepSeg: automatic axon and myelin segmentation from microscopy data using convolutional neural networks. Sci. Rep. 8, 3816

21. Long, J. et al. (2015) Fully convolutional networks for semantic segmentation. In The IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 3431–3440, IEEE

22. Lin, G. et al. (2016) Efficient piecewise training of deep structured models for semantic segmentation. In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 3194–3203, IEEE

23. Chen, L.-C. et al. (2016) DeepLab: semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. arXiv

24. Buggenthin, F. et al. (2017) Prospective identification of hematopoietic lineage choice by deep learning. Nat. Methods 14, 403–406

25. Yu, H. et al. (2018) Phenotypic antimicrobial susceptibility testing with deep learning video microscopy. Anal. Chem. 90, 6314–6322

26. Lu, C. et al. (2017) An oral cavity squamous cell carcinoma quantitative histomorphometric-based image classifier of nuclear morphology can risk stratify patients for disease-specific survival. Mod. Pathol. 30, 1655–1665

27. Faust, K. et al. (2018) Visualizing histopathologic deep learning classification and anomaly detection using nonlinear feature space dimensionality reduction. BMC Bioinform. 19, 173

28. Edington, C.D. et al. (2018) Interconnected microphysiological systems for quantitative biology and pharmacology studies. Sci. Rep. 8, 4530

29. Nguyen, B. et al. (2018) A platform for high-throughput assessments of environmental multistressors. Adv. Sci. (Weinh.) 5, 1700677

30. Pandey, C.M. et al. (2017) Microfluidics based point-of-care diagnostics. J. 13, 1700047

FAQ - Deep learning and microfluidics: a review

How is deep learning associated with microfluidics?

Microfluidic high-throughput systems produce data in high volume, and deep learning offers artificial intelligence-based approaches to analyzing it. This combination allows complex biological and biomedical data from microfluidic devices to be processed efficiently, speeding up research in disease detection, cell classification, and tissue analysis.

What are the main deep learning architectures used in microfluidics?

The main architectures are unstructured-to-unstructured networks for simple classification, recurrent neural networks for sequential data, convolutional neural networks for images, and image-to-image networks for tasks such as cell segmentation. The architecture is chosen according to the type of data and the nature of the analysis.

How can deep learning perform label-free cell classification?

Deep learning networks can identify and categorize cells without fluorescent tags or other markers. The simplest approach takes cell traits such as circularity and perimeter as inputs; the more detailed approach applies convolutional neural networks directly to raw microscopy images, automatically identifying the features that improve classification.

What are recurrent neural networks, and what can they be used for in microfluidics?

Recurrent neural networks handle sequential data, in which the order of the elements matters, such as time-series measurements taken in microfluidic devices. They have been used to characterize microfluidic soft sensors, compensating for nonlinearity and hysteresis in the pressure response, and they can improve the accuracy of cell growth measurements over time by taking prior measurements into account.

How do convolutional neural networks process microfluidic imaging data?

Convolutional neural networks apply filters that slide over the image and extract dominant features across multiple layers. In microfluidics, they allow high-throughput cell classification directly from microscopy images, without manual feature extraction. Examples include classifying a population of blood cells and segmenting images of nerve cells into axon, myelin, and background.

How is deep learning used in organ-on-chip systems?

Deep learning algorithms can analyze images and videos from organ-on-chip devices to detect spatial heterogeneity in tissues, classify histomorphological information, and track organ development. In the future, this could enable real-time control of multiorgan systems that regulate themselves based on algorithmic analysis.

Can deep learning aid in experimental design?

Yes. Deep learning networks can run complex parallelized experiments, such as thousands of microwell cultures, by deciding when to inject and what to inject. They can also aid the planning and post-experiment analysis of studies in which several environmental factors, such as temperature, light, and nutrient supply, are tested on the growth of microalgae.

What does cloud-based deep learning offer for microfluidics?

Cloud-based deep learning combines data from microfluidic diagnostic tools distributed worldwide, including paper-based tests. By feeding these networks with international data, it becomes possible to track disease outbreaks, predict patterns, and even help contain epidemics through centralized processing of point-of-care diagnostic and food safety test results.

What are the practical obstacles to applying deep learning in microfluidics laboratories?

Integration does not entail major challenges or costs. The main requirements are adequate computational facilities, data for training the algorithms, and expertise in both microfluidics and machine learning. Most applications can use existing frameworks and need little extra infrastructure.

What are the future trends for deep learning in microfluidics?

Expected developments include self-regulating autonomous living systems driven by real-time analysis, improved high-throughput diagnostics with real-time data interpretation, extended organ-on-chip platforms with predictive capabilities, and globally connected diagnostic networks that monitor population health. The technology is still developing toward fully automated experimental systems with minimal human intervention.