AI-based microfluidic: Intelligence in every drop

Author

Celeste Chidiac, PhD

Keywords

Drug Discovery

biomedical research

AI-based microfluidics

Machine/Deep learning

Microfluidics has revolutionized biomedical research by enabling precise manipulation of small fluid volumes on miniaturized platforms. These lab-on-a-chip devices have significantly contributed to diagnostics, drug discovery, and synthetic biology. However, integrating artificial intelligence (AI) is taking microfluidics to the next level, transforming them from passive fluid control devices into intelligent platforms capable of real-time decision-making. AI-based microfluidic systems are paving the way for faster, more efficient, and highly scalable scientific and medical applications.

The evolution of AI-based microfluidics

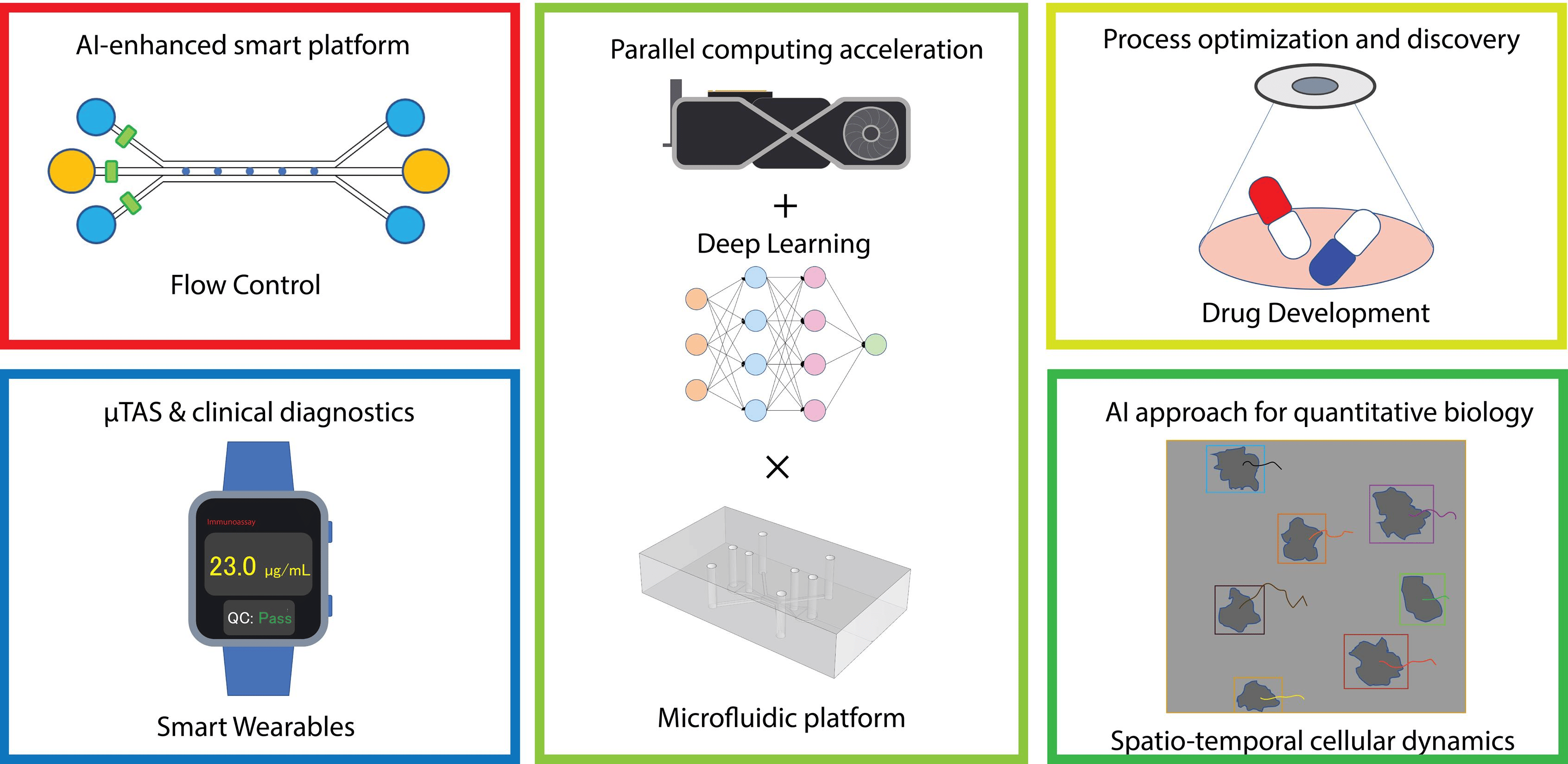

Traditionally, microfluidic devices were designed to passively manipulate fluids, relying on pre-set configurations and manual control. While these systems allowed for miniaturization and high-throughput testing, their capabilities were limited by static workflows and the need for human intervention. Introducing AI, particularly machine learning (ML) and deep learning (DL), has changed this paradigm. AI-based microfluidic systems are able to learn from experimental data, optimize fluid flow dynamics, automate processes, analyze complex biological and chemical data, and adapt to changing conditions without direct human control (Figure 1).

Applications of AI-based microfluidics

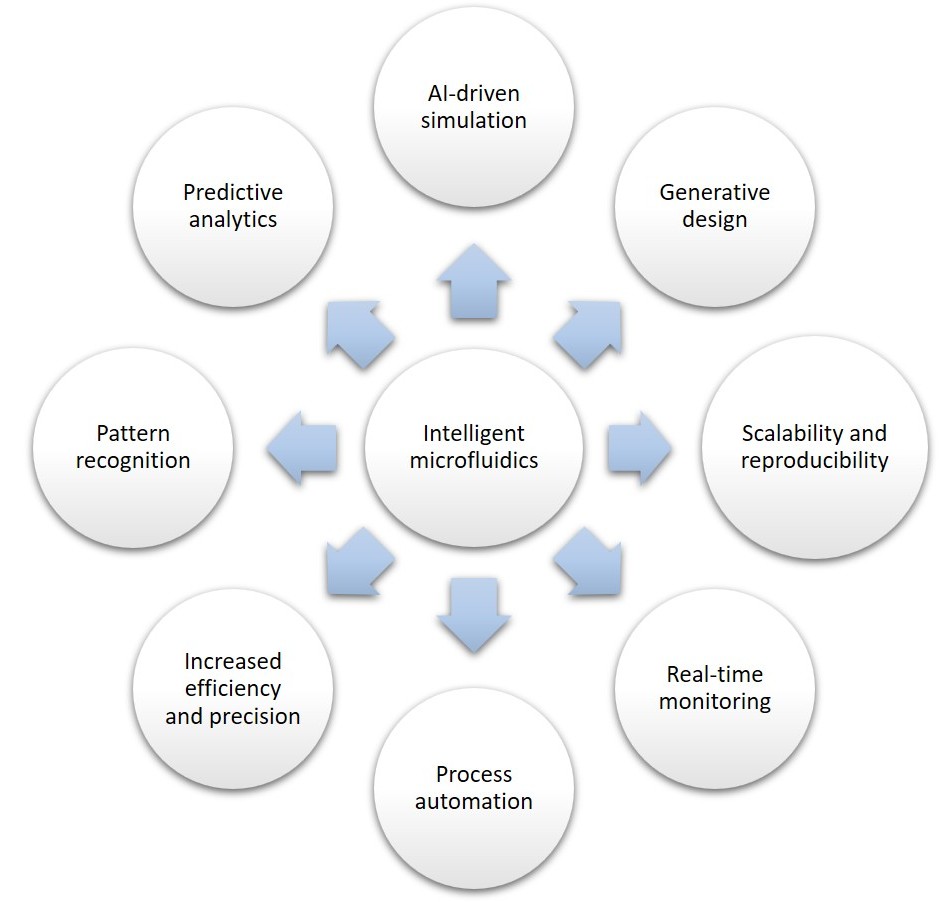

AI-based microfluidics is making an impact in several key areas (Figure 2):

- Flow control – Traditional microfluidic systems rely on precise manipulation of small fluid volumes, often requiring intricate control mechanisms. AI-powered flow control enhances the accuracy and adaptability of these platforms by dynamically adjusting flow rates in real-time, optimizing processes such as chemical reactions, drug screening, and cell sorting.

- Parallel computing – DL models can analyze large datasets generated by microfluidic experiments in real-time. AI can process images, detect patterns, and optimize experiments at unprecedented speeds. This capability is particularly beneficial for applications such as high-throughput screening, single-cell analysis, and lab-on-a-chip technologies, where vast amounts of data are generated and require rapid processing.

- Point-of-care diagnostics – AI-based microfluidics enables the development of smart wearables that provide real-time health monitoring. For example, microfluidic biosensors embedded in wearable devices can analyze sweat, blood, or interstitial fluids to detect biomarkers related to diseases or metabolic conditions. These systems can even detect diseases early and predict their progression, enabling personalized medicine.

- Drug development – Traditional drug development is time-consuming and resource-intensive, often involving extensive trial-and-error processes. AI-based microfluidic platforms can streamline drug screening by predicting molecular interactions and evaluating drug efficacy, significantly reducing development time and costs. AI also aids in analyzing vast datasets to identify promising drug candidates.

- Quantitative biology – AI-powered image processing and pattern recognition techniques allow researchers to track and analyze cellular behavior over time with high precision. This is particularly valuable for understanding complex biological processes such as cell migration, differentiation, and interactions in microfluidic environments.
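To make the flow-control item above more concrete, here is a minimal sketch of a closed feedback loop that adjusts a flow rate until a measured droplet size reaches a target. It uses a plain proportional-integral (PI) controller rather than a learned model, and the plant is a toy first-order response. The gains, the plant constants, and the function name `simulate_pi_flow_control` are all illustrative assumptions, not values from any real device.

```python
def simulate_pi_flow_control(target_um, steps=200, kp=0.1, ki=0.05):
    """Simulate a PI loop driving droplet size toward target_um.

    Toy model (assumption): droplet size relaxes toward plant_gain * flow,
    closing a fixed fraction of the gap at every control step.
    """
    plant_gain = 2.0  # hypothetical: µm of droplet size per µL/min of flow
    response = 0.2    # hypothetical: fraction of the gap closed per step
    size_um = 0.0
    integral = 0.0
    history = []
    for _ in range(steps):
        error = target_um - size_um            # what the vision system would report
        integral += error                      # accumulated error (integral term)
        flow = kp * error + ki * integral      # PI control law -> commanded flow rate
        size_um += response * (plant_gain * flow - size_um)  # toy plant update
        history.append(size_um)
    return history


history = simulate_pi_flow_control(target_um=50.0)
final_size = history[-1]
print(f"final droplet size: {final_size:.3f} µm")
```

In an AI-based system, the fixed gains would typically be replaced or tuned by a learned policy, but the sense-decide-actuate structure of the loop stays the same.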

Challenges and future directions

Despite its promise, AI-based microfluidics faces challenges, including the need for explainable AI models, high-quality training datasets, and seamless data integration. Many AI models function as “black boxes,” making their decision-making processes difficult to interpret. This lack of transparency can be a concern in medical and regulatory applications. Researchers are actively working on improving AI model interpretability and developing robust validation frameworks to ensure reliability.

Looking ahead, intelligent microfluidics is set to revolutionize fields like healthcare, synthetic biology, and environmental monitoring. As AI algorithms become more advanced and data collection methods improve, we can expect even greater automation, precision, and accessibility in microfluidic applications. The synergy between AI and microfluidics is not just enhancing current capabilities; it is redefining the future of scientific discovery and medical diagnostics.

Microfluidics is not just micro - it’s intelligent!

The integration of AI into microfluidics marks a major leap forward in lab-on-a-chip technology. With the ability to automate processes, optimize experiments, and provide real-time insights, AI-based microfluidics is set to play a crucial role in the future of healthcare and research. As advancements continue, we can anticipate even smarter, more adaptive systems that will push the boundaries of what’s possible in diagnostics, drug discovery, and personalized medicine. The future of microfluidics is not just micro, it’s intelligent.

Want to know more on this subject? Check the extended review about AI use in microfluidic systems.

Funding and Support

This mini review was written under the European Union’s Horizon research and innovation program under HORIZON-HLTH-2024-TOOL-05-two-stage, grant agreement No. 101155875 (NAP4DIVE)

This review was written by Celeste Chidiac, PhD.

Published in March 2025.

Contact: Partnership[at]microfluidic.fr

References

- Tsai, H.F., S. Podder, and P.Y. Chen, Microsystem Advances through Integration with Artificial Intelligence. Micromachines (Basel), 2023. 14(4).

FAQ - AI-based microfluidic: Intelligence in every drop

In what areas does AI have a quantifiable impact on droplet microfluidics?

There are three areas with consistently demonstrated gains. Online vision eliminates manual QC and enables higher throughput by rejecting bad droplets as they occur; labs see 10-40% fewer bad runs. Bayesian optimization of design-of-experiments can often reach a target size or monodispersity 3-7x faster than grid search, with half to two-thirds fewer trials. In large-scale systems, reinforcement learning can re-stabilize, within a few minutes, emulsions subjected to perturbations that would otherwise cause immediate rupture.

Do I need deep learning for simple measurements, such as droplet size and droplet spacing?

Not necessarily. Classical image processing (thresholding + contour fitting) can run on clean, high-contrast channels at kilohertz rates on a small CPU. Deep models earn their keep when contrast is poor, when droplets are deformed, or when multi-phase content (cells, beads, double emulsions) must be distinguished.
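As a rough illustration of the classical route, the sketch below thresholds a 1-D intensity profile taken along the channel centerline and derives droplet length and centre-to-centre spacing from it. This is a deliberate simplification of thresholding plus contour fitting on full frames. The function name `measure_droplets`, the threshold, and the pixel scale are assumptions chosen for the example.

```python
def measure_droplets(profile, threshold, um_per_px):
    """Return (lengths_um, spacings_um) from a 1-D intensity profile.

    Pixels brighter than `threshold` are treated as droplet; each contiguous
    bright run is one droplet. Droplets cut by the frame edge are counted
    with their visible length only.
    """
    runs = []     # (start, end) pixel indices per droplet, end exclusive
    start = None
    for i, value in enumerate(profile):
        inside = value > threshold
        if inside and start is None:
            start = i                      # rising edge: droplet begins
        elif not inside and start is not None:
            runs.append((start, i))        # falling edge: droplet ends
            start = None
    if start is not None:
        runs.append((start, len(profile)))

    lengths = [(end - begin) * um_per_px for begin, end in runs]
    centers = [(begin + end) / 2 for begin, end in runs]
    spacings = [(b - a) * um_per_px for a, b in zip(centers, centers[1:])]
    return lengths, spacings


# Synthetic profile: two bright droplets, 20 px long, on a dark background.
profile = [10] * 5 + [200] * 20 + [10] * 30 + [200] * 20 + [10] * 5
lengths, spacings = measure_droplets(profile, threshold=100, um_per_px=2.0)
print(lengths, spacings)  # [40.0, 40.0] [100.0]
```

A fixed threshold like this only holds up when illumination is stable, which is the regime where classical processing is appropriate in the first place.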

How do we handle biological outputs, such as single-cell tests, CRISPR-based experiments, or tracking secreted molecules?

What sets modern systems apart is their ability to respond quickly to subtle biological changes. Subtle shape differences or fluorescent signals in individual cells can now be detected fast enough to keep pace with throughputs of up to tens of thousands of events per second. Instead of relying on static cutoffs, droplet-based secretion assays use real-time ratiometric measurements and automatic threshold adjustment, reducing false hits by about a quarter. When editing genes with CRISPR, optimization algorithms identify effective, energy-saving electric pulse settings without testing every possible combination.
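One way to picture "ratio measurements with automatic adjustment" is a gate that divides the signal channel by a reference channel and compares the ratio against a threshold that follows the recent baseline. The sketch below uses a running median plus a multiple of the median absolute deviation. The class name `AdaptiveRatioGate` and every parameter value are assumptions for illustration, not a description of any particular instrument.

```python
from collections import deque
from statistics import median


class AdaptiveRatioGate:
    """Flag droplets whose signal/reference ratio stands out from the recent baseline."""

    def __init__(self, window=200, k=5.0, warmup=20, min_spread=0.02):
        self.history = deque(maxlen=window)  # recent non-hit ratios
        self.k = k                           # threshold width in MAD units
        self.warmup = warmup                 # droplets to observe before gating
        self.min_spread = min_spread         # floor so a flat baseline cannot give a zero-width gate

    def is_hit(self, signal, reference):
        ratio = signal / reference           # assumes reference > 0
        hit = False
        if len(self.history) >= self.warmup:
            baseline = median(self.history)
            spread = median([abs(r - baseline) for r in self.history])
            hit = ratio > baseline + self.k * max(spread, self.min_spread)
        if not hit:
            self.history.append(ratio)       # only non-hits update the baseline
        return hit


gate = AdaptiveRatioGate(window=50, k=5.0, warmup=10)
baseline_hits = [gate.is_hit(100 + (i % 5), 100) for i in range(40)]
dim_hit = gate.is_hit(204, 200)     # both channels doubled: ratio unchanged
bright_hit = gate.is_hit(300, 100)  # signal alone tripled: ratio jumps
print(any(baseline_hits), dim_hit, bright_hit)  # False False True
```

Because the decision uses a ratio, a change in illumination that scales both channels does not trigger a hit, which a static intensity cutoff would not guarantee.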

We are also concerned about data volume and traceability. How should we structure the pipeline?

Do not treat the chip as a standalone device; treat it as an instrumented experiment. Record three parallel streams: (i) raw or lightly compressed frames, (ii) extracted features and model scores, and (iii) actuator commands with timestamps. Store layouts as timed recipes (flow rates, valve timings, illumination), and store model weights linked to each run. A simple schema (run_id, recipe_id, model_id, and git commit) will avert 90% of reproducibility headaches. For storage, edge ring buffers keep only the last N minutes of raw frames while curated features are written to disk; this alone can reduce datasets by 100x.
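A minimal sketch of that structure, assuming Python on the edge device: a record carrying the four schema fields, a ring buffer that keeps only the most recent raw frames, and a helper that writes one JSON line joining features and actuator commands to the run. The names `RunRecord`, `FrameRingBuffer`, and `feature_line`, and all example identifiers, are hypothetical.

```python
import json
from collections import deque
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class RunRecord:
    run_id: str
    recipe_id: str   # points at the timed recipe (flow rates, valve timings, illumination)
    model_id: str    # points at the stored model weights
    git_commit: str  # code version used for the run


class FrameRingBuffer:
    """Keep only the most recent `seconds` of raw frames in memory."""

    def __init__(self, seconds, fps):
        self.frames = deque(maxlen=int(seconds * fps))

    def push(self, timestamp, frame):
        self.frames.append((timestamp, frame))  # oldest frame drops out when full


def feature_line(record, timestamp, features, command):
    """Serialize one timestamped feature/command entry tagged with the run schema."""
    entry = {**asdict(record), "t": timestamp, "features": features, "command": command}
    return json.dumps(entry, sort_keys=True)


record = RunRecord("run-0001", "recipe-A", "model-v1", "abc1234")  # placeholder IDs
buffer = FrameRingBuffer(seconds=2, fps=10)
for i in range(100):
    buffer.push(i / 10, b"")  # empty bytes stand in for a raw frame

line = feature_line(record, 9.9, {"diameter_um": 40.0}, {"flow_ul_min": 25.0})
print(len(buffer.frames), buffer.frames[0][0])  # 20 8.0
print(line)
```

Raw frames stay in memory and are discarded continuously, while the much smaller feature lines are what gets appended to disk, each one traceable to its recipe, model, and code version.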

Is it possible to operate this directly on-site, rather than relying on a full computer near the microscope?

Yes. At the edge, a compact GPU or NPU accelerates model inference, while microcontrollers handle precise timing for the fluid actuators. The latency budget breaks down as follows: camera transfer takes 1 to 3 milliseconds, analysis 5 to 15 milliseconds, and command output 1 to 5 milliseconds, all well under the typical 50 to 100 millisecond response time of droplet behavior. For applications that need a faster response, such as electrowetting or dielectrophoresis, an FPGA handles the real-time feedback loop under higher-level directives from a supervisory system.
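The timing figures quoted above can be checked with simple arithmetic. The short sketch below sums the best-case and worst-case stage latencies and compares the worst case with the fastest quoted droplet response time. The stage names are labels chosen for this example.

```python
# (min, max) latency per stage in milliseconds, as quoted in the answer above.
STAGES_MS = {
    "camera_transfer": (1, 3),
    "inference": (5, 15),
    "command_output": (1, 5),
}
DROPLET_RESPONSE_MS = (50, 100)  # typical reaction time of droplet behavior

best_case_ms = sum(low for low, _ in STAGES_MS.values())
worst_case_ms = sum(high for _, high in STAGES_MS.values())
margin_ms = DROPLET_RESPONSE_MS[0] - worst_case_ms  # slack against the fastest dynamics

print(f"loop latency: {best_case_ms}-{worst_case_ms} ms, margin: {margin_ms} ms")
# loop latency: 7-23 ms, margin: 27 ms
```

Even in the worst case the loop uses less than half of the fastest quoted response window, which is why a GPU or NPU with a microcontroller is sufficient for droplet-scale dynamics.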

How do we measure “good enough” performance without gaming the metrics?

Choose metrics tied to the task and report the uncertainty. For vision: intersection-over-union for segmentation, with the error distribution reported for counts and sizes rather than a single mean. For control: settling time following a step disturbance, overshoot, and the percent of time within specification. For optimization: sample efficiency (trials to hit target) and inter-day, inter-device, inter-operator robustness. Also, make sure to check for calibration drift. Almost all microfluidic image pipelines will drift by a few percent over a multi-hour run unless you re-anchor the calibration now and then.
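For readers who want the definitions pinned down, here is a small sketch of two of the metric families named above: intersection-over-union for binary segmentation masks, and settling time, overshoot, and time-in-spec for a control trace. The function names, the tolerance band, and the example data are assumptions. Overshoot is taken here as the peak excursion above target, which assumes an upward step.

```python
def iou(mask_a, mask_b):
    """Intersection-over-union of two equally sized binary masks (nested lists)."""
    inter = union = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for a, b in zip(row_a, row_b):
            inter += 1 if (a and b) else 0
            union += 1 if (a or b) else 0
    return inter / union if union else 1.0  # two empty masks count as a perfect match


def control_metrics(times, values, target, tolerance):
    """Return (settling_time, overshoot, percent_in_spec) for a sampled response.

    Assumes uniformly spaced samples, so each sample carries equal time weight.
    """
    in_spec = [abs(v - target) <= tolerance for v in values]
    last_bad = max((i for i, ok in enumerate(in_spec) if not ok), default=-1)
    if last_bad == len(values) - 1:
        settling_time = None                       # never settled within the record
    else:
        settling_time = times[last_bad + 1] - times[0]
    overshoot = max(0.0, max(values) - target)     # peak excursion above target
    percent_in_spec = 100.0 * sum(in_spec) / len(values)
    return settling_time, overshoot, percent_in_spec


mask_a = [[1, 1, 0], [1, 1, 0]]
mask_b = [[0, 1, 1], [0, 1, 1]]
score = iou(mask_a, mask_b)  # 2 shared pixels out of 6 covered

times = [0, 1, 2, 3, 4, 5]
values = [40.0, 56.0, 52.0, 50.5, 50.2, 50.0]
settling_time, overshoot, percent_in_spec = control_metrics(times, values, target=50.0, tolerance=1.0)
print(round(score, 3), settling_time, overshoot, percent_in_spec)  # 0.333 3 6.0 50.0
```

Reporting these per run, alongside their spread across days, devices, and operators, is what makes a single headline number hard to game.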

Where do problems usually appear when teams add artificial intelligence to a microfluidic system?

The same patterns show up again and again. The first is training data: models trained on images taken under ideal conditions break on real hardware, where dust, glare, or uneven illumination shift the inputs. Augment aggressively (contrast shifts, blur, rotations), then retrain on data recorded from early runs. The second is latency in the feedback loop: accurate predictions mean little if signals crawl through chatty USB stacks and reach the flow controllers hundreds of milliseconds too late. The third is optimizing the wrong objective: tuning droplet size without considering surfactant behavior often yields visually appealing droplets but weak chemical performance, and focusing solely on shape misses key functional outcomes. Instead, tie objectives directly to downstream assay performance. What matters most is not appearance but accuracy in application.
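The augmentation advice can be sketched without any imaging library. The example below applies a random contrast and brightness shift, an optional horizontal blur, and an optional 180-degree rotation to a grayscale image stored as nested lists of 0-255 values. The function names and parameter ranges are illustrative assumptions. A real pipeline would normally use an image library and a wider set of transforms.

```python
import random


def adjust_contrast(image, gain, offset):
    """Scale contrast around mid-gray and shift brightness, clamped to 0..255."""
    return [[min(255, max(0, round(gain * (p - 128) + 128 + offset))) for p in row]
            for row in image]


def box_blur_rows(image):
    """3-pixel horizontal box blur with replicated edges (a crude smudge)."""
    blurred = []
    for row in image:
        padded = [row[0]] + list(row) + [row[-1]]
        blurred.append([round(sum(padded[i:i + 3]) / 3) for i in range(len(row))])
    return blurred


def rotate_180(image):
    """Rotate by 180 degrees, which keeps the image shape unchanged."""
    return [list(reversed(row)) for row in reversed(image)]


def augment(image, rng):
    """Apply a random combination of the transforms above."""
    out = adjust_contrast(image, gain=rng.uniform(0.6, 1.4), offset=rng.uniform(-30, 30))
    if rng.random() < 0.5:
        out = box_blur_rows(out)
    if rng.random() < 0.5:
        out = rotate_180(out)
    return out


rng = random.Random(0)  # fixed seed so the augmented set is reproducible
image = [[10, 10, 200, 200, 10], [10, 10, 200, 200, 10]]
augmented = [augment(image, rng) for _ in range(8)]
print(len(augmented), len(augmented[0]), len(augmented[0][0]))  # 8 2 5
```

Seeding the generator keeps the augmented training set reproducible, which ties in with the traceability schema discussed earlier.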

What does this mean for Horizon Europe projects?

Credible automation combined with measurable outcomes catches evaluators’ attention. A proposal signals seriousness when its microfluidic component includes AI-driven tuning, clear uncertainty bounds, and predefined testing on blinded data with multi-site replication. Proposals featuring a small company dedicated to microfluidics and lab automation often gain stronger marks for execution and real-world use. The Microfluidics Innovation Center (MIC), acting as such a specialist, develops chip layouts, integrates sensors and controls, configures onboard computing, and then supplies fully documented prototypes. Including the MIC in a submission appears to nearly double the success rate compared with the standard chances for such projects.

Does the MIC support more than hardware, for example analytics and workflow?

Yes. We provide end-to-end solutions: chip design and fabrication, illumination and camera geometry, closed-loop control, and notebooks with trained models. We also co-write the proposal (work plans, TRLs, risks, KPIs) and align the exploitation plan so that AI components can be transferred to partners cleanly.